Process

Concept

A process is an instance of a

program in execution.

Process – a program in execution;

process execution must progress in sequential fashion.

A process includes:

1.

program counter

2.

Stack

3.

data section

Process State

- Processes may be in one of 5 states, as shown in Figure 3.2 below.

- New - The process is in the stage of being created.

- Ready - The process has all the resources available that it needs to run, but the CPU is not currently working on this process's instructions.

- Running - The CPU is working on this process's instructions.

- Waiting - The process cannot run at the moment, because it is waiting for some resource to become available or for some event to occur. For example the process may be waiting for keyboard input, disk access request, inter-process messages, a timer to go off, or a child process to finish.

- Terminated - The process has completed.

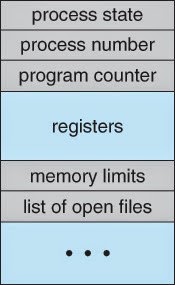

Process Control Block

For each process there is a Process Control Block, PCB, which stores the following ( types of ) process-specific information, as illustrated in Figure 3.1. ( Specific details may vary from system to system. )- Process State - Running, waiting, etc., as discussed above.

- Process ID, and parent process ID.

- CPU registers and Program Counter - These need to be saved and restored when swapping processes in and out of the CPU.

- CPU-Scheduling information - Such as priority information and pointers to scheduling queues.

- Memory-Management information - E.g. page tables or segment tables.

- Accounting information - user and kernel CPU time consumed, account numbers, limits, etc.

- I/O Status information - Devices allocated, open file tables, etc.

(Process control block)

Diagram

showing CPU switch from process to process

Threads

- Modern systems allow a single process to have multiple threads of execution, which execute concurrently.

Process

Scheduling

- The two main objectives of the process scheduling system are to keep the CPU busy at all times and to deliver "acceptable" response times for all programs, particularly for interactive ones.

- The process scheduler must meet these objectives by implementing suitable policies for swapping processes in and out of the CPU.

- ( Note that these objectives can be conflicting. In particular, every time the system steps in to swap processes it takes up time on the CPU to do so, which is thereby "lost" from doing any useful productive work. )

Scheduling Queues

- All processes are stored in the job queue.

- Processes in the Ready state are placed in the ready queue.

- Processes waiting for a device to become available or to deliver data are placed in device queues. There is generally a separate device queue for each device.

- Other queues may also be created and used as needed.

Schedulers

- A long-term scheduler is typical of a batch system or a very heavily loaded system. It runs infrequently, ( such as when one process ends selecting one more to be loaded in from disk in its place ), and can afford to take the time to implement intelligent and advanced scheduling algorithms.

- The short-term scheduler, or CPU Scheduler, runs very frequently, on the order of 100 milliseconds, and must very quickly swap one process out of the CPU and swap in another one.

- Some systems also employ a medium-term scheduler. When system loads get high, this scheduler will swap one or more processes out of the ready queue system for a few seconds, in order to allow smaller faster jobs to finish up quickly and clear the system.

- An efficient scheduling system will select a good process mix of CPU-bound processes and I/O bound processes.

Queueing-diagram representation of

process scheduling:-

CPU Scheduling

CPU

Scheduling is the process used to maximize the CPU utilization.

CPU Scheduling is done in following

circumstances:

- When process switches from the running stage to waiting state

- When process switches from the running stage to ready state

- When process switches from the waiting stage to ready state

CPU Scheduling is of two types

depending on nature

- Preemptive Scheduling

- Non –Preemptive Scheduling

Preemptive Scheduling

Allows the process to be interrupted

in the midst of its execution.

Non –Preemptive Scheduling

Doesnot allows the process to be

interrupted in the midst of its execution.

Note: The main aim of the scheduling is to maximize the CPU

When two process are executed simultaneously they are called concurrent process.

Scheduling

algorithms

1.First come, First served (FCFS)

In this scheduling algorithm, the

process which comes first are executed first.

Process Burst Time

P1

24

P2

3

P3

3

Suppose

that the processes arrive in the order: P1 , P2 , P3

The Gantt

Chart for the schedule is:

|

P1

|

P2

|

P3

|

0

24

27

30

Waiting time for P1 = 0; P2 = 24; P3 = 27

Average waiting time: (0 + 24 + 27)/3 = 17

Suppose

that the processes arrive in the order

P2

, P3 , P1 .

The Gantt

chart for the schedule is:

|

P2

|

P3

|

P1

|

0

3

6

30

Waiting time for P1 = 6; P2 = 0; P3 = 3

Average waiting time: (6 + 0 + 3)/3 = 3

2. Shortest-Job-First

(SJF) Scheduling

In

this scheduling algorithm, the process with short execution time will be

executed first.

Process Arrival

Time Burst Time

P1

0.0

7

P2

2.0

4

P3

4.0

1

P4

5.0

4

SJF (non-preemptive)

|

P1

|

P3

|

P2

|

P4

|

0

7

8

12

16

Average waiting time = [0

+(8-2)+(7-4) +(12-5)] /4 =4

Example

of Preemptive SJF

Proces

Arrival Time

Burst Time

P1

0.0

7

P2

2.0

4

P3

4.0

1

P4

5.0

4

SJF (preemptive)

|

P1

|

P2

|

P3

|

P2

|

P4

|

P1

|

0

2

4

5

7

11

16

Average waiting time = (9 + 1

+ 0 +2)/4 =3

3.Priority Scheduling

In this scheduling algorithm, the process with highest priority process will be executed first, then second highest priority process will be executed, so on at last process with least priority will be executed.

|

Process

|

Burst Time

|

Priority

|

|

P1

|

19

|

2

|

|

P2

|

3

|

1

|

|

P3

|

5

|

3

|

If the order of the arrival is like

in the order shown in the above table, then inorder to schedule using SJF,

first Gantt is plotted.

|

Gantt

Chart

|

||

|

P2

|

P1

|

P3

|

Gantt chart denotes the sequence of

the exectution of the process. Here sequence of execution is P2,P1,P3.

Waiting time of the process are

shown below

P1-----3

P2-----0

P3-----22

Thus, average wating time will be

average waiting waiting time=

(3+0+22)/3=8.3

4.Round Robin Scheduling

Round robin scheduling is similar to FCFS scheduling, except that CPU

bursts are assigned with limits called time quantum.

Example of RR with Time Quantum = 4

Process Burst Time

P1

24

P2

3

P3

3

The Gantt chart is:

|

P1

|

P2

|

P3

|

P1

|

P1

|

P1

|

P1

|

P1

|

0

4

7

10

14

18

22

26 30

Average waiting time

= [(30-24)+4+7]/3 = 17/3 =5.66

Multiple-Processor

Scheduling

- When multiple processors are available, then the scheduling gets more complicated, because now there is more than one CPU which must be kept busy and in effective use at all times.

- Load sharing revolves around balancing the load between multiple processors.

- Multi-processor systems may be heterogeneous, ( different kinds of CPUs ), or homogenous, ( all the same kind of CPU ). Even in the latter case there may be special scheduling constraints, such as devices which are connected via a private bus to only one of the CPUs.

Process Synchronization:

Process synchronization refers to the idea that

multiple processes are to join up or handshake at a certain point, in order to

reach an agreement or commit to a certain sequence of action.Thread

synchronization or serialization, strictly defined, is the application of

particular mechanisms to ensure that two concurrently-executing threads or processes do not execute specific

portions of a program at the same time. If one thread has begun to execute a

serialized portion of the program, any other thread trying to execute this

portion must wait until the first thread finishes. Synchronization is used to

control access to state both in small-scale multiprocessing systems -- in

multithreaded environments and multiprocessor computers -- and in distributed

computers consisting of thousands of units -- in banking and database systems,

in web servers, and so on.

Critical Section

- set of instructions that must be controlled so as to allow exclusive access to one process

- rarely: access to the critical section is limited to n processes instead of one process

- execution of the critical section by processes is mutually exclusive in time

Solution to the Critical Section Problem must meet three conditions...

- mutual exclusion:

if process

is

executing in its critical section, no other process is executing in its

critical section

is

executing in its critical section, no other process is executing in its

critical section - progress: if no process is executing in its critical section and there exists some processes that wish to enter their critical sections, then only those processes that are not executing in their remainder section can participate in the decision of which will enter its critical section next, and this decision cannot be postponed indefinitely

- if no process is in critical section, can decide quickly who enters

- only one process can enter the critical section so in practice, others are put on the queue

- bounded waiting: there must exist a bound on the number of times that other processes are allowed to enter their critical sections after a process has made a request to enter its critical section and before that request is granted

- The wait is the time from when a process makes a request to enter its critical section until that request is granted

- in practice, once a process enters its critical section, it does not get another turn until a waiting process gets a turn (managed as a queue)

Semaphore:-

a semaphore is a variable or abstract data type that is used for controlling

access, by multiple processes, to a common resource in a parallel programming or a multi user

environment.

A semaphore is a protected variable

whose value can be accessed and altered only by the operations P and V and

initialization operation called 'Semaphoiinitislize'.

Binary Semaphores can assume only

the value 0 or the value 1 counting semaphores also called general semaphores

can assume only nonnegative values.

The P

(or wait or sleep or down) operation on semaphores S, written as P(S) or wait (S), operates as follows:

P(S):

IF S > 0

THEN S := S - 1

ELSE (wait on S)

THEN S := S - 1

ELSE (wait on S)

The V

(or signal or wakeup or up) operation on semaphore S, written as V(S) or signal (S), operates as follows:

V(S):

IF (one or more process are waiting on S)

THEN (let one of these processes proceed)

ELSE S := S +1

THEN (let one of these processes proceed)

ELSE S := S +1

Operations P and V are done as

single, indivisible, atomic action. It is guaranteed that once a semaphore

operations has stared, no other process can access the semaphore until

operation has completed. Mutual exclusion on the semaphore, S, is enforced within

P(S) and V(S).

If several processes attempt a P(S)

simultaneously, only process will be allowed to proceed. The other processes

will be kept waiting, but the implementation of P and V guarantees that

processes will not suffer indefinite postponement.

Classical probems of synchronization

- The Bounded Buffer Problem (also called the The Producer-Consumer Problem)

- The Readers-Writers Problem

- The Dining Philosophers Problem

The Bounded Buffer Problem

- a buffer which can store n items

- a producer process which creates the items (1 at a time)

- a consumer process which processes them (1 at a time)

A consumer cannot consume unless there is at least one produced item.

Semaphore empty=N, full=0, mutex=1;

process producer {

while (true) {

empty.acquire();

mutex.acquire();

// produce

mutex.release();

full.release();

}

}

process consumer {

while (true) {

full.acquire();

mutex.acquire();

// consume

mutex.release();

empty.release();

}The semaphore mutex provides mutual exclusion for access to the buffer.

What are the semaphores empty and full used for?

The Readers-Writers Problem

Each process is classified as either a reader or writer.

Multiple readers may access the file simultaneously.

A writer must have exclusive access (i.e., cannot share with either a reader or another writer).

A solution gives priority to either readers or writers.

- readers' priority: no reader is kept waiting unless a writer has already obtained permission to access the database

- writers' priority: if a writer is waiting to access the database, no new readers can start reading

- in the readers' priority version, writers may starve

- in the writers' priority version, readers may starve

Semaphore mutex = 1;

Semaphore db = 1;

int readerCount = 0;

process writer {

db.acquire();

// write

db.release();

}

process reader {

// protecting readerCount

mutex.acquire();

++readerCount;

if (readerCount == 1)

db.acquire();

mutex.release();

// read

// protecting readerCount

mutex.acquire();

--readerCount;

if (readerCount == 0)

db.release;

mutex.release();

}readerCount is a <cs> over which we must maintain control and we use mutex to do so.

Self-study: address starvation in this solution, and then develop a writers' priority semaphore solution, and then address starvation in it.

The Dining Philosophers Problem

The dining philosophers problems is an example of a large class or concurrency control problems; it is a simple representation of the need to allocate several resources among several processes in a deadlock-free and starvation-free manner.

A semaphore solution:

// represent each chopstick with a semaphore

Semaphore chopstick[] = new Semaphore[5]; // all = 1 initially

process philosopher_i {

while (true) {

// pick up left chopstick

chopstick[i].acquire();

// pick up right chopstick

chopstick[(i+1) % 5].acquire();

// eat

// put down left chopstick

chopstick[i].release();

// put down right chopstick

chopstick[(i+1) % 5].release();

// think

}

}This solution guarantees no two neighboring philosophers eat simultaneously, but has the possibility of creating a deadlock (how so?).

Self-study: develop a deadlock-free semaphore solution.

Play the what if scenario game with all of these problems.

Uses of semaphores

Semaphores can be used for more than simply protecting a

critical section.

- protecting acccess to a critical section (e.g., db in the R/W problem)

- as a mutex lock (e.g., to protect the shared variables used in solving a probem such as readerCount above)

- to protect a relationship (e.g., empty and full as used in the P/C problem)

Nice notes

ReplyDelete